Method Overview

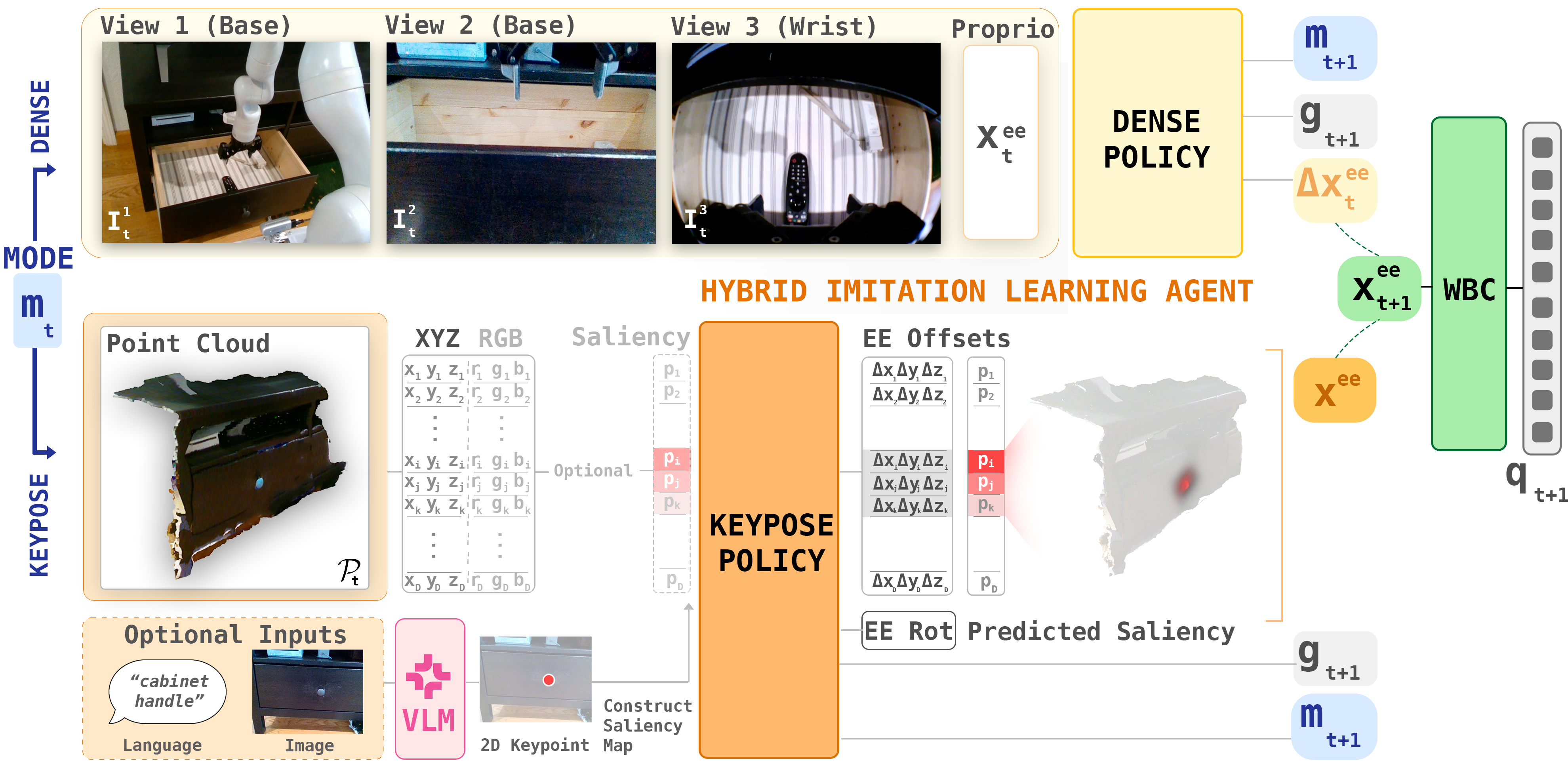

Using the data collected and annotated above, we train HoMeR, a framework that

combines a hybrid IL policy with a whole-body controller for execution:

- Keypose Policy: Uses third-person point clouds to predict 6-DoF end-effector poses and next control mode for long-range motion. Rather than directly regressing a target pose, the policy learns to predict a task-relevant salient point in the scene and outputs a relative offset from this point to the desired position. Separate learnable tokens predict end-effector orientation, gripper state, and control mode. The policy can optionally be conditioned on an externally specified salient point—e.g., from a vision-language model—for dynamic and interpretable goal specification (HoMeR-Cond).

- Dense Policy: Diffusion Policy which uses RGB images (third-person and wrist) to predict relative 6-DoF delta actions for fine-grained manipulation once near objects.

- Whole-Body Controller (WBC): Converts end-effector actions into joint commands for the mobile base and arm, enabling smooth, constraint-aware execution.